What is Human Lag?

We all recognise technological lag: a sluggish webpage load time, a poor connection on Zoom, or a delay in mouse response are all instantly recognisable examples that trigger the all-too-familiar feeling of intense frustration. Lag typically results when one constituent of an ecosystem fails to keep in step with its counterparts. In technical terms, there may not be enough bandwidth for the entire ecosystem to operate efficiently, or latency may have developed between individual constituents within that ecosystem.

At a societal level, we experience cultural lag when society lags behind technological innovation. The term cultural lag was coined by the American sociologist William F. Ogburn in his book Social Change, published in 1922. The theory of cultural lag posits that culture takes time to catch up with technological innovation. The intervening period is rife with social discontent and conflict. For example, the rapid development of electric cars today challenges social norms around driving skills, traffic rules and the ethics of road behaviour, while the supply of lithium and cobalt needed to make batteries is already raising environmental and geopolitical questions.

And while we are adopting technologies faster than ever before, a new kind of lag is creeping into our lives, affecting the ways we use and benefit from the technology available to us. We call this Human Lag. Simply put, human lag exists when innovation surpasses adaptation.

Compared to the rapid proliferation of technology, human cognitive systems evolve at a relative snail’s pace. The time it takes us to process, understand and act on new information grows longer as the amount of information we need to sift through increases. In this age of information, our cognitive systems are creaking under pressure.

The length of time between receiving new information and being able to take full advantage of it also depends on how much mental energy we have available. Unlike modern machines with near-infinite processing power, humans have a finite budget of mental energy to draw on. When we exert cognitive effort, our brain actually consumes calories. In fact, chess grandmasters can burn up to 6,000 calories a day during tournaments, just by sitting and thinking. And just this month, researchers found that exerting cognitive effort for long periods of time can lead to a build-up of toxic byproducts in the prefrontal cortex, slowing down our cognitive functioning and resulting in mental fatigue!

Mental fatigue manifests as a feeling that your brain just won’t function right. People often describe it as brain fog. You can’t concentrate, even simple tasks take forever, and you find yourself rereading the same paragraph or tweaking the same line of code over and over again. Things that would not have bothered you earlier in the day become more irksome as you tire. You might find yourself impatient with colleagues or friends. Left unchecked, ongoing mental fatigue can result in burnout.

Let’s dig a little deeper into some of the underlying causes of human lag.

What's Causing Human Lag?

If IPR > IPC, then ⚠️ Information Overload ⚠️

(IPR = information-processing requirements; IPC = information-processing capacity)

Too much information, not enough time, and poor-quality information are all significant contributors to information overload (often used synonymously with cognitive overload). When we’re overloaded, we’re less effective. We might seek out easy, low-value tasks in an effort to feel productive. We may find ourselves paralysed, not knowing where to begin with a task. Or we might accidentally overlook important details while overwhelmed.

Our hunger for new information can be insatiable, but our ability to focus on a single task for a meaningful amount of time has become more fragile. As Nobel laureate economist Herbert Simon put it, “a wealth of information creates a poverty of attention.”

Multitasking is also taking its toll on our ability to focus and damaging our productivity. According to Meyer, Evans and Rubinstein, converging evidence suggests that our executive control processes have two distinct, complementary stages. The first stage is goal shifting (“I want to do this now instead of that”), and the second stage is rule activation (“I’m turning off the rules for that and turning on the rules for this”). Meyer has estimated that even brief mental blocks created by shifting between tasks can cost as much as 40% of someone’s productive time.

What are the consequences of Human Lag?

1. Cognitive Offloading

Anyone who has ever made a grocery list, taken meeting notes, or used a calendar to keep track of their daily schedule has engaged in cognitive offloading: the process of externally recording thoughts and things to remember in order to reduce cognitive demand. Although it may seem intuitive, cognitive offloading is perhaps one of the most vital techniques supporting human memory. The fallibility of human memory has been well documented, and offloading can be a very effective coping strategy in a noisy world, expanding the amount of information that is readily accessible to us.

Although recent technology has made offloading easy, and we have become symbiotic with our smartphones and other devices, offloading has some drawbacks. For example, Sparrow, Liu, and Wegner (2011) had participants study trivia questions and led them to believe that the studied information would either be saved (offloaded) or would not be stored for later access (not offloaded). Participants who thought they would have access to the stored information later demonstrated worse memory for the trivia questions than participants who did not rely on technology to store the information.

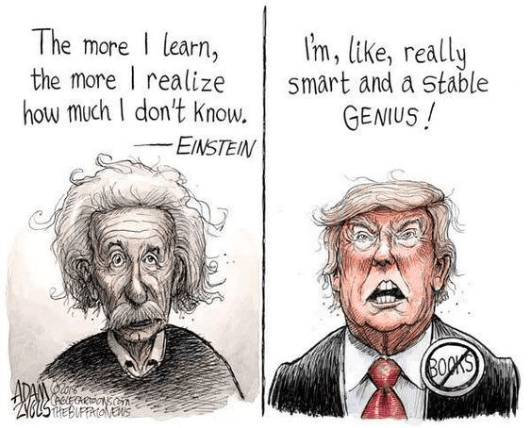

This reliance is often referred to as the ‘Google Effect’: research also shows that we increasingly rely on the internet as an extension of our transactive memory. We no longer bother to store information ourselves when we know that we can easily find it again on Google. In fact, a more recent study found that those of us who can use Google effectively believe ourselves to be smarter overall. Yikes!

Another recent study found that when we use the internet to search for information, we are worse at recalling that same information later than if we had found it another way. So, despite our forgetfulness, we suffer from an illusion of learning and feel much more intelligent after a quick Google search.

2. Learned Helplessness

What happens when technology doesn’t operate as expected? Do you prise open your smartphone to replace the battery, or pore over lines of code to track down a software bug? Like many, you might opt to seek expert help. The sleek design of today’s digital devices no longer encourages us to get curious about what lies beneath, while new software rarely comes with instructions, never mind access to the underlying code.

Modern technologies signal to us not to tinker. As we internalise these signals, we move from conscious thinking to unconscious acceptance. Searching for a solution becomes too much effort and trying to fix it takes too much time. Eventually, we lose the connection between the problem we’re facing and how to approach a potential solution. It’s not that we don’t know how to solve the problem; the challenge is that we no longer know which questions to ask, what outcome we’re expecting or how to get moving.

Learned helplessness can present in several different ways:

- Having tried and failed yet watched others succeed, we lose confidence.

- Feeling incompetent and unconfident because of failures, we give up trying.

- Accustomed to instant gratification when asking for help, we can’t motivate ourselves to figure it out on our own.

This phenomenon poses challenges not only for users but also for companies implementing new tools and services that demand more investment from employees as they grow in complexity. The more complex the infrastructure gets, the greater the threat posed by learned helplessness.

3. Impaired Decision-Making

“Information overload occurs when the amount of input to a system exceeds its processing capacity. Decision-makers have fairly limited cognitive processing capacity. Consequently, when information overload occurs, it is likely that a reduction in decision quality will occur.”

These days, we have coined a new phrase for this human fallibility — Infoxication. Infoxication refers to the difficulty or impossibility of taking a decision or keeping informed about a particular subject, due to the endless amount of data and content that exists on the web.

When we’re unable to process the full scope of relevant factors required to make a decision, we risk making mistakes. Working with a depleted brain can cause a lack of attention and increased impulsivity, and can make it more effortful to combine information and understand its implications. A brain running on low energy makes worse decisions.

In the face of mounting inputs and limited time, we often revert to sub-optimal decision-making strategies:

- Impetuous decision-making: making instinctive decisions too quickly and without the full facts.

- Procrastination: taking so long to make a decision that it is no longer optimal or the critical decision point has passed.

- Decision paralysis: failing to take any action or decision due to mental exhaustion and overwhelm.

- Outsourcing: avoiding the decision altogether and handing it off to friends, colleagues, apps (e.g. Tripadvisor), or discussion boards (e.g. Discord, Slack groups).

4. Reduced Capacity for Learning

Our brains are cleverly adapting to information overload by quickly scanning information to determine whether it is important enough to pay attention to. We speed-read through blogs and articles hunting for a quick nugget to satisfy our thirst for knowledge. Rather than read a book, we snack on soundbites and other digital fast food. This form of shallow engagement with information not only impacts our ability to think deeply, but also contributes to the illusion of knowledge.

In the world of learning, it’s well understood that overloading our limited processing capacity reduces the effectiveness of learning. In the same way that having too many tabs open in your browser can slow down your computer’s processing, our working memory struggles to process inputs effectively when we’re overwhelmed or confused.

To learn effectively, we need to be able to think clearly, which means our attention, focus, processing speed, and memory all need to be working well. A fragmented attention span, poor focus, and a cluttered mind impede our ability to pass information through to long-term memory.

What can we do about Human Lag?

While technological lag is being addressed by upgraded 5G networks, humans are not so easily upgraded (yet!). An unfocused, overwhelmed and fatigued generation could have catastrophic consequences. If we fail to address the underlying causes of human lag, the capacity gap between technology and humanity will only continue to deepen.

As technology expands and accelerates at a tremendous pace, so do the risks we face. Our human limitations need to be addressed consciously, because they will continue to cause problems if they are ignored or, worse, not accounted for in our design planning. The capacity gap between what we are capable of doing and what we need to do has to be bridged. We need innovative design thinking that anticipates what is coming next. This is essential for our competitive advantage today and for our future tomorrow.

I’m excited about starting a conversation about human lag and helping our technology amplify humanity so we don’t fall further behind. My new book, Humology, is out now! Connect with me on LinkedIn to continue the conversation!